Conversational AI Chatbots Are Smart, But Are They Safe Too?

- November 11, 2021

- Priyanka Shah

- Conversational AI

From the workspace to the home, chatbots are everywhere. From Alexa to Siri, they have all become our friends. Every day we share a great deal of information about ourselves, our lives and our work with these bot buddies, so that they can understand us and use that information to answer our queries. Machines now answer back, and they are deployed across networks to serve customers better. But these technological advances come with a loophole: security threats!

Since chatbots are responsible for collecting and safeguarding personal information, they are a natural target for hackers and malicious software. Even as the threat grows, demand for conversational AI chatbots is skyrocketing, because they automate responses and cut waiting times while offering a fully personalized experience.

What is meant by Conversational AI Security?

Conversational AI Security is a branch of artificial intelligence which focuses on the security implications of conversational AI systems. It covers topics such as natural language processing, speech recognition, and machine learning techniques in order to analyse and detect malicious intent in digital conversations. This technology can be used to identify anomalies in conversations such as suspicious vocabulary or certain types of behaviour that may indicate malicious intent. Additionally, Conversational AI Security helps protect user experience by ensuring that personal data remains secure while interacting with conversational AI systems, and helps mitigate the risk of attacks such as phishing or data theft. By integrating this technology into existing security frameworks, companies are better able to identify potential threats before they become serious problems.

What are the risks connected with Conversational AI chatbots?

The risks associated with conversational AI chatbots are considerable. Conversational AI chatbots and virtual assistant chatbots are both based on artificial intelligence technology, but there are some key differences between them. Conversational AI chatbots are designed to understand natural language and respond in a conversational manner, whereas virtual assistant chatbots are designed simply to provide information or take action on behalf of the user. As such, one risk with conversational AI chatbots is that they may misinterpret what users say and give incorrect responses. Additionally, if not properly managed and monitored, these bots can become too intrusive or even disrespect users’ privacy. Finally, given their complexity, cost and maintenance requirements may also be a concern when opting for conversational AI chatbots.

What are the threats that a conversational AI enabled chatbot might face?

Here’s a list:

1. Malware

Malware covers everything from viruses and Trojan horses to adware. It is specifically designed to damage your system and its existing files, or to steal data. A Trojan horse, for example, may look legitimate at first glance or appear to come from a legitimate website, but once it enters your system it can duplicate files, copy data and eventually shut the system down.

2. Viruses

We’ve all heard about them, and we all have our fears. Viruses are one of the most common security threats, and if one is released into your bot system, it can be used for data theft.

3. Repurposing of Conversational Chatbots

Changing a bot’s flows or the information it stores is a common practice in cybercrime. Repurposed bots serve as tools to automate mass attacks such as data theft and server crashes, or to use your devices to defraud other people without your knowledge or consent.

4. Impersonation of Individuals

Here we are talking about conversational AI enabled chatbots, which are made to talk. People want to talk with these chatbots and share their queries, or maybe little life updates. An attacker who impersonates a bot not only gains access to sensitive information but also plays with people’s emotions. This type of attack usually targets high-ranking officials, but it is just as much a scam against ordinary people.

5. Denial of Service attack

A denial of service attack floods your conversational chatbot with so many requests that it can no longer carry out tasks or respond to commands. The bot stops listening to your clients and stops serving them. This can become a huge pitfall for e-commerce companies whose chatbots are designed to process and help customers with their queries.

FACTORS BEHIND THESE ATTACKS:

- Unencrypted communications

- Missing or no HTTPS protocols

- Employees not following security protocols

- Back-door access by hackers

- Hosting platform issues

How to Secure Conversational AI Chatbots?

Here’s a list of techniques that can be used to save your bot from turning into an evil one.

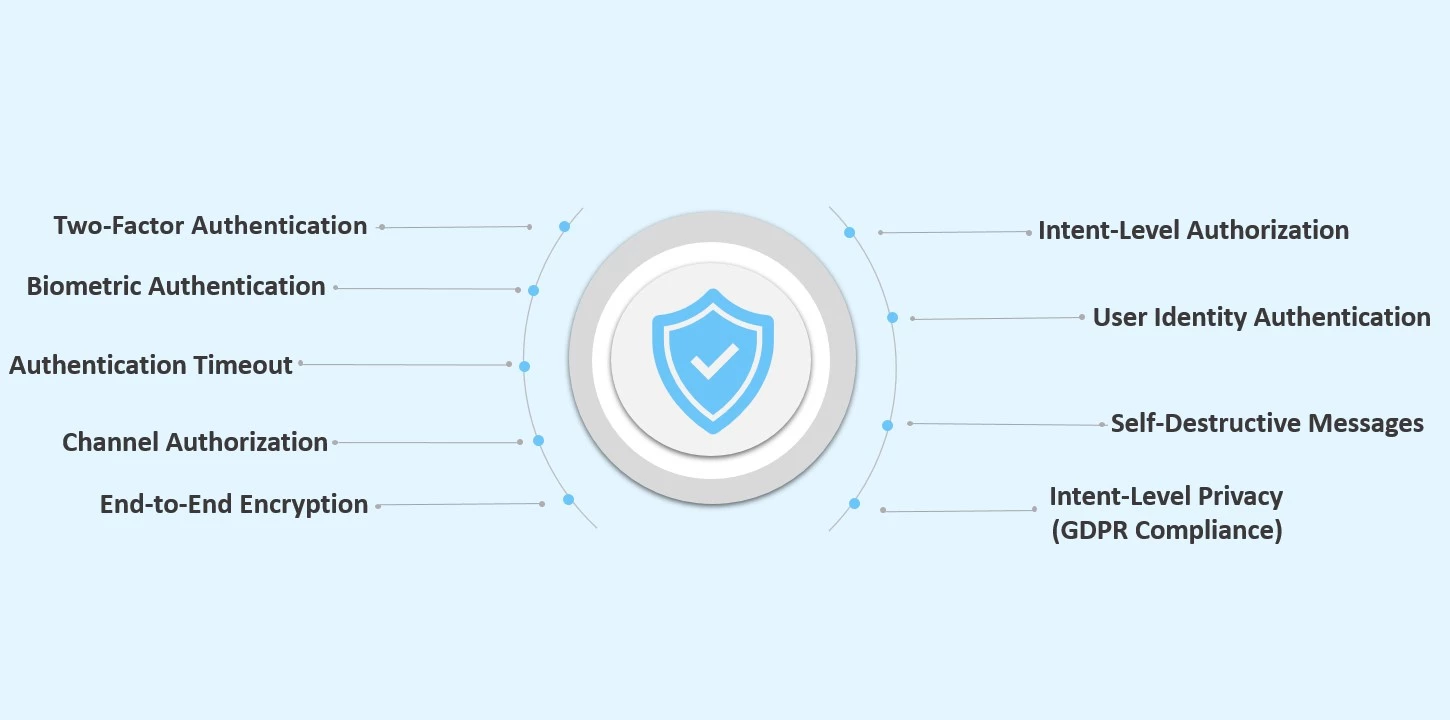

1. Two-Factor Authentication

This is one of the oldest techniques for allowing access only to the people who are actually allowed. The basic step is a unique ID and password, requested at every login. The second factor is an OTP (One Time Password) issued each time the user logs in. This helps ensure that nobody gains access to someone else’s account. Authenticating every employee and user in this way helps keep the chatbot secure.
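
The OTP step can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the function names `issue_otp` and `verify_otp`, the in-memory `store` dictionary and the five-minute validity window are hypothetical choices for the example, not part of any particular chatbot platform.

```python
import hmac
import secrets
import time

OTP_TTL_SECONDS = 300  # assumed validity window; tune for your deployment


def issue_otp(store, user_id, now=None):
    """Create a 6-digit one-time password and remember when it expires."""
    now = time.time() if now is None else now
    code = f"{secrets.randbelow(10**6):06d}"  # cryptographically strong digits
    store[user_id] = (code, now + OTP_TTL_SECONDS)
    return code  # in practice this is sent by SMS or email, not shown in chat


def verify_otp(store, user_id, submitted, now=None):
    """Accept a code at most once, and only before it expires."""
    now = time.time() if now is None else now
    entry = store.pop(user_id, None)  # pop: each issued code gets one attempt
    if entry is None:
        return False
    code, expires_at = entry
    if now > expires_at:
        return False
    # Constant-time comparison avoids leaking how many digits matched.
    return hmac.compare_digest(code.encode(), submitted.encode())
```

A bot would call `issue_otp` after the password check succeeds and `verify_otp` when the user types the code back. A real deployment would likely keep the codes in a shared store rather than a Python dictionary.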

2. Biometric Authentication

Biometric authentication in chatbots is a form of security that utilizes an individual’s unique physical or behavioural characteristics to verify their identity. It is becoming increasingly popular and often used as an efficient and secure way to authenticate users. Biometric authentication can be used with voice recognition, fingerprint scanning, facial recognition, and iris scanning. As chatbots are becoming increasingly popular for customer service purposes, biometric authentication is an ideal way to protect users from fraud or malicious activities.

3. Authentication Timeout

Authentication timeouts in a chatbot refer to the amount of time a user is allowed to stay logged into a chatbot session. After that timeout period has elapsed, the user must re-authenticate by providing valid credentials such as a username and password. This feature keeps an unattended session from being picked up by someone else, so unauthorized access is not granted. It also helps to reduce the chances of malicious users exploiting the system.
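
As a rough sketch of how such a timeout might be checked before each message is handled (the `ChatSession` class, the `handle_message` function and the 15-minute limit are assumptions made for this example):

```python
import time
from dataclasses import dataclass

SESSION_TIMEOUT_SECONDS = 15 * 60  # assumed limit; pick what suits your risk level


@dataclass
class ChatSession:
    user_id: str
    authenticated_at: float  # Unix timestamp of the last successful login

    def is_expired(self, now=None):
        """True once the timeout period has elapsed since the last login."""
        now = time.time() if now is None else now
        return now - self.authenticated_at >= SESSION_TIMEOUT_SECONDS


def handle_message(session, text, now=None):
    """Refuse to process messages on an expired session."""
    if session.is_expired(now):
        return "Your session has expired. Please log in again."
    return f"Processing: {text}"
```

Here the clock starts at login. Some teams prefer to restart it on every message, so that only idle sessions expire.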

4. Channel Authorization

AI-powered chatbots have the great convenience of being available on several channels such as Skype for Business, Microsoft Teams, Facebook, Slack, etc. Channel authorization is a process that organizations use to manage access to their digital resources. It ensures that only those with the necessary permissions have access to resources and prevents unauthorized people from accessing confidential information.
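
One simple way to picture channel authorization is an allowlist of what the bot may do on each channel. The channel keys, feature names and the `is_feature_allowed` helper below are hypothetical and only illustrate the idea:

```python
# Hypothetical mapping: which bot features each channel is allowed to expose.
ALLOWED_FEATURES = {
    "teams": {"faq", "hr_policies", "payroll"},
    "slack": {"faq", "hr_policies"},
    "facebook": {"faq"},
}


def is_feature_allowed(channel, feature):
    """Deny by default: unknown channels get no features at all."""
    return feature in ALLOWED_FEATURES.get(channel.lower(), set())
```

In this sketch a public channel such as Facebook only ever sees the FAQ, while confidential features stay on internal channels.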

5. End-to-End Encryption

Encryption converts a message into an unreadable form that only the sender and receiver can decrypt and read. This stops anyone else from seeing any part of the text sent. It is being widely adopted by chatbot designers and is one of the most robust methods of ensuring chatbot security. It’s a key feature of chat services like WhatsApp, and large tech developers have been keen to guarantee the security of such encryption, even when challenged by national governments.

6. Intent level authorization

Intent level authorization is an access control approach that defines the access rights of a user to certain resources in a system. It involves determining the purpose or intent behind a user’s request, and verifying that their permission level allows them to proceed. This helps organizations protect sensitive data from unauthorized users by allowing only those with the appropriate clearance to access it. Intent level authorization also ensures that all requests are tracked, allowing administrators to audit and monitor user activity. This helps create a secure environment and provides administrators with the necessary tools to detect fraudulent behaviour or security breaches.
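
A minimal sketch of this check, assuming the bot’s language model has already mapped the user’s message to an intent name. The intents, roles and the `authorize_intent` function are invented for illustration:

```python
# Hypothetical intents and the minimum role each one requires.
REQUIRED_ROLE = {
    "check_opening_hours": "guest",
    "view_account_balance": "customer",
    "issue_refund": "agent",
}
ROLE_RANK = {"guest": 0, "customer": 1, "agent": 2}

audit_log = []  # every decision is recorded so administrators can review it


def authorize_intent(user_role, intent):
    """Allow the intent only if the user's role meets its minimum role."""
    required = REQUIRED_ROLE.get(intent)
    # Unknown intents and unknown roles are denied by default.
    allowed = (
        required is not None
        and ROLE_RANK.get(user_role, -1) >= ROLE_RANK[required]
    )
    audit_log.append((user_role, intent, allowed))
    return allowed
```

With this table, a customer asking for a refund is turned away while an agent is allowed through, and both decisions end up in `audit_log`.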

7. User Identity Authentication

User Identity Authentication in chatbots is the process of verifying that a user is who they claim to be. This can include verifying an email address or phone number, or using biometric data such as a fingerprint or facial recognition. The purpose of this type of authentication is to ensure that only the intended user can access a chatbot and its features, which helps protect users’ personal information and prevent unauthorized access.

8. Self-Destructive Messages

Self-erasing, or self-destructing, messages are another effective way to enhance chatbot security. They can be used in cases where users must share sensitive information that needs to be destroyed or deleted within a specific period of time. AI-powered chatbots for banking and insurance can include this feature to improve chatbot security.
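
The idea can be sketched as a message store where every entry carries an expiry time. The `ExpiringMessageStore` class and its method names are assumptions for this example, and it only covers the in-memory copy of a message:

```python
import time


class ExpiringMessageStore:
    """Keeps messages only until their time-to-live runs out."""

    def __init__(self, ttl_seconds):
        self.ttl_seconds = ttl_seconds
        self._messages = {}  # message_id -> (text, expires_at)

    def put(self, message_id, text, now=None):
        now = time.time() if now is None else now
        self._messages[message_id] = (text, now + self.ttl_seconds)

    def purge(self, now=None):
        """Delete every expired message and return how many were removed."""
        now = time.time() if now is None else now
        expired = [m for m, (_, exp) in self._messages.items() if now >= exp]
        for message_id in expired:
            del self._messages[message_id]
        return len(expired)

    def get(self, message_id, now=None):
        """Return the text, or None if the message is gone or expired."""
        now = time.time() if now is None else now
        self.purge(now)
        entry = self._messages.get(message_id)
        return entry[0] if entry else None
```

For the deletion to be meaningful in practice, logs, backups and analytics copies of the same message would need the same treatment.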

9. Intent Level Privacy with GDPR Compliance

Intent Level Privacy (GDPR Compliance) in an AI-powered chatbot refers to the privacy settings used to protect personal data and comply with the General Data Protection Regulation (GDPR). The purpose is to ensure that all data related to a particular user is kept secure, private, and protected.

For example, when a user interacts with a chatbot they may be asked to provide information such as their name, email address or other contact details. This information should only be used in accordance with GDPR regulations and not shared or sold without the user’s consent. With Intent Level Privacy (GDPR Compliance) in place, users have control over how their personal data is used, stored, and shared. It also ensures that any data collected is handled securely and responsibly.

This helps maintain a high level of trust between businesses and their customers, while also helping companies maintain compliance with GDPR regulations.

Different ways to test your security measures

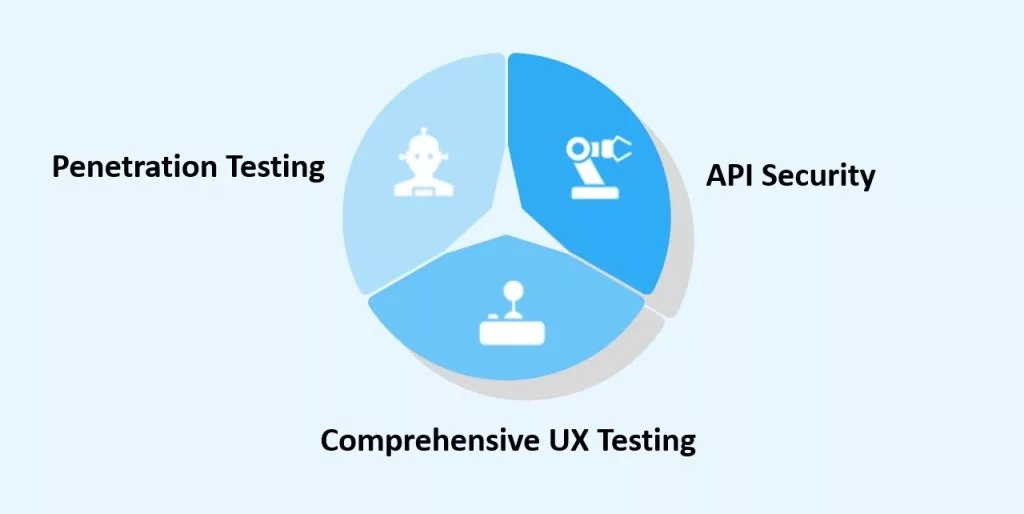

Testing the security of AI virtual assistants is an important part of ensuring that the chatbot is secure and can be trusted to handle sensitive data. To test the security of a chatbot, the following methods are useful:

Penetration testing

Penetration testing for chatbots is a process that evaluates the security of a chatbot by simulating an attack or malicious activity. In addition to evaluating the chatbot’s overall security posture, it is used to find systemic flaws and weaknesses that attackers might exploit. During a penetration test, the tester will attempt to break into the system using various methods such as brute force attacks, SQL injections, and other malicious activities. A successful penetration test identifies security flaws and their corresponding solutions. This helps organizations fix any issues before they are exploited by hackers or malicious actors, thus protecting their data and customers from harm.

API security

API security testing helps ensure that chatbots are protected from malicious attacks. To safeguard the chatbot from unauthorized access, modification, or damage, its security protocols and procedures must be tested. This includes authenticating users, validating input data, encrypting communications and other methods of protecting the chatbot from malicious intrusion. Additionally, API security testing can be used to detect any vulnerabilities within the system architecture which could potentially be exploited by cyber criminals. This type of testing is essential for ensuring that your chatbot remains safe and secure so that it can provide its intended services without any disruption.
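
Input validation is one of the easier pieces to illustrate. The sketch below assumes the chatbot API receives a JSON payload with a `text` field; the `validate_message` function, the field name and the 500-character limit are hypothetical choices for the example:

```python
import re

MAX_MESSAGE_LENGTH = 500  # assumed limit for a single chat message
# Control characters other than tab, newline and carriage return.
CONTROL_CHARS = re.compile(r"[\x00-\x08\x0b\x0c\x0e-\x1f\x7f]")


def validate_message(payload):
    """Return (ok, reason) for an incoming chat message payload."""
    if not isinstance(payload, dict):
        return False, "payload must be an object"
    text = payload.get("text")
    if not isinstance(text, str) or not text.strip():
        return False, "text is required"
    if len(text) > MAX_MESSAGE_LENGTH:
        return False, "text too long"
    if CONTROL_CHARS.search(text):
        return False, "text contains control characters"
    return True, "ok"
```

An API security test would then send malformed, oversized and empty payloads to the endpoint and confirm that each one is rejected before it reaches the bot’s logic.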

Comprehensive UX testing

To make sure that users enjoy using the chatbot, thorough UX testing is a crucial step in the development process. It involves testing a variety of aspects such as the user interface, natural language processing, and conversational flows. A thorough UX test will reveal any usability or functionality problems and show how users interact with the chatbot. It also helps to optimize the chatbot by providing insight into how users expect the bot to behave and respond to their requests. Comprehensive UX testing should be conducted regularly throughout the development process to ensure that the chatbot meets user expectations and provides a smooth and enjoyable experience.

To Sum Up:

Conversational chatbots are all the rage, but something of a risk too! While these AI enabled conversational chatbots bring another level of excitement and hype to the communication sector, robust, multi-layer protection is a must so that customers can keep using these bots with the same enthusiasm and without threat.

Learn how Conversational AI can accelerate success in How to Bring Growth in Using Conversational AI.

Kevit.io makes sure that you and your customers always enjoy a safe and secure environment to communicate in, and that your data is properly safeguarded. Let’s discuss important safety techniques today. Schedule a demo or mail us at coffee@kevit.io, and learn more about chatbots and security at Kevit.io.
